Photonic AI Accelerator for energy-efficient High-Performance Computing and real-time AI Applications available in a 19″ rack-mountable Server

The Q.ANT Native Processing Unit (NPU), the first commercially available photonic processor, ushers in a new era of energy-efficient, accelerated AI and HPC. By using the natural properties of light, we realize basic AI functions purely optically, without electronic detours. This is why we call it Native Computing. Here is what sets photonic computing apart from conventional computing:

Seize the exclusive opportunity to experience Q.ANT’s first commercial Photonic AI Accelerator, designed to set new standards in energy efficiency and computational speed. Test, innovate, and get hands-on with a technology that promises a sustainable and powerful future. Redefine the possibilities of AI processing, where cutting-edge efficiency meets the brilliance of light.

The Native Processing Server (NPS) is a 19″ rack-mountable server equipped with our photonic NPU PCIe card and designed specifically for AI inference and advanced data processing. Its plug-and-play system design makes it ready for integration into data centers and HPC environments, providing immediate access to photonic computing. The NPS can be upgraded with additional NPU PCIe cards for even more processing power in the future.

| System / Subsystem | Feature |
| --- | --- |
| System node | x86 processor architecture; based on a commercially available 19″ 4U rack system |
| Operating system | Linux (Debian/Ubuntu) with Long-Term Support |
| Network interface | Ethernet, up to 10 Gbit/s |
| Software interface | C / C++ and Python API |
| API to subsystem | Linux device driver |
| Native Processing Unit (NPU) | |
| Power consumption of NPU | 45 W |
| Photonic integrated circuit (PIC) | Ultrafast photonic core based on z-cut Lithium Niobate on Insulator (LNoI) |
| Throughput of NPU | 100 MOps |
| Cooling of NPU | Passive |
| Operating temperature range | 15 °C to 35 °C |

Each milestone brings us closer to unlocking the full potential of Photonic Computing: dramatically faster performance at a fraction of the energy.

Operation speed

Our system offers the potential for exponential growth in operational speed, which is projected to rise from 0.1 GOps in 2025 to 100,000 GOps by 2028, a million-fold increase.
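The roadmap figures can be checked with a little arithmetic. The minimal sketch below computes the overall speedup from the quoted 2025 and 2028 values and, assuming steady exponential growth between them (an assumption; the roadmap does not describe the shape of the curve), the constant yearly multiplier that would produce it. The function names are hypothetical.

```python
import math

# Throughput figures quoted in the roadmap, in GOps.
START_GOPS = 0.1        # projected for 2025
TARGET_GOPS = 100_000   # projected for 2028
START_YEAR, TARGET_YEAR = 2025, 2028


def total_speedup(start: float, target: float) -> float:
    """Overall improvement factor between two throughput figures."""
    return target / start


def annual_growth_factor(start: float, target: float, years: int) -> float:
    """Constant per-year multiplier that yields the overall speedup.

    Assumes smooth exponential growth, which the roadmap does not state.
    """
    return (target / start) ** (1 / years)


speedup = total_speedup(START_GOPS, TARGET_GOPS)
per_year = annual_growth_factor(START_GOPS, TARGET_GOPS, TARGET_YEAR - START_YEAR)
print(f"total speedup: {speedup:,.0f}x")             # 1,000,000x
print(f"implied growth per year: {per_year:.1f}x")   # 100.0x over three years
```

Under this assumption, a million-fold gain over the three years between the quoted dates corresponds to roughly a 100× improvement every year.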

Energy efficiency

Photonics saves energy during operation. For AI workloads, photonic processors promise 30× lower energy consumption at the hardware level than digital processors built from transistors (CMOS).

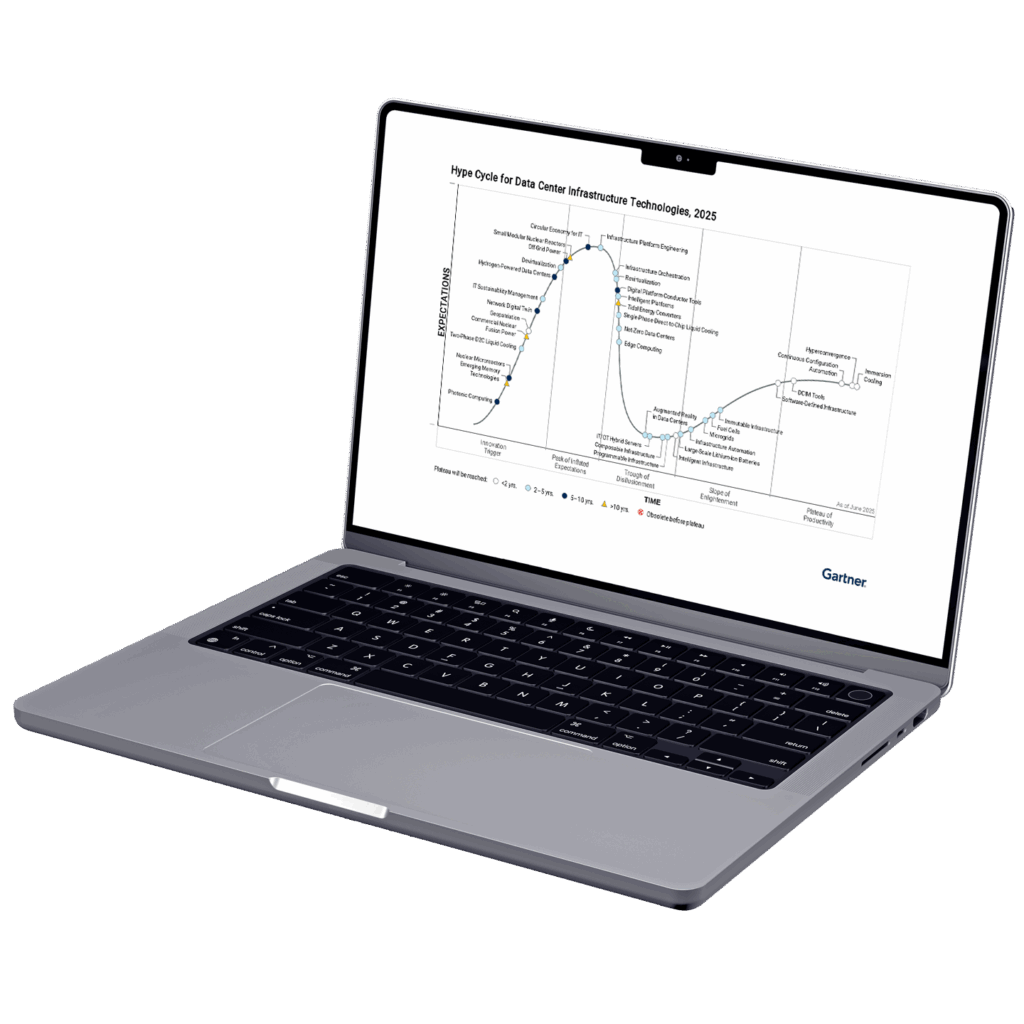

We provide complimentary access to the Gartner® Hype Cycle™ for Data Center Infrastructure Technologies 2025 report. Learn how Photonic Computing can enable energy-efficient data centers as AI and HPC demands keep rising.

As an analog computing unit, the NPU can evaluate complex, nonlinear mathematical functions that would be too energy-intensive to compute on conventional processors. Initial applications are in AI inference and AI training, paving the way for efficient and sustainable AI computing.

Start programming the Q.ANT NPU with our custom Software Development Kit, the Q.ANT Toolkit. It lets you operate directly at the chip level or use higher-level, optimized neural network operations, such as fully connected or convolutional layers, to build your AI model. The Toolkit includes a comprehensive collection of example applications that illustrate how AI models can be programmed. You can use these examples directly or as a foundation for your own implementations.
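A fully connected layer, one of the higher-level operations mentioned above, reduces to a matrix-vector product followed by a nonlinear function. The minimal sketch below is a plain-Python digital reference of that computation for illustration only; it does not use the Q.ANT Toolkit, whose API is not described here, and the names `matvec`, `relu` and `fully_connected` are hypothetical.

```python
from typing import Callable, List

Vector = List[float]
Matrix = List[List[float]]


def matvec(weights: Matrix, x: Vector) -> Vector:
    """Matrix-vector product: the core operation of a fully connected layer."""
    if any(len(row) != len(x) for row in weights):
        raise ValueError("each weight row must match the input length")
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def relu(v: float) -> float:
    """A common nonlinear activation function."""
    return max(0.0, v)


def fully_connected(weights: Matrix, bias: Vector, x: Vector,
                    activation: Callable[[float], float] = relu) -> Vector:
    """y = activation(W x + b), computed digitally as a reference."""
    if len(bias) != len(weights):
        raise ValueError("bias must have one entry per weight row")
    z = matvec(weights, x)
    return [activation(zi + bi) for zi, bi in zip(z, bias)]


W = [[1.0, -2.0, 0.5],
     [0.0, 1.0, 1.0]]
b = [0.5, -6.0]
x = [2.0, 1.0, 4.0]
print(fully_connected(W, b, x))  # [2.5, 0.0]: the second output is clipped by ReLU
```

Separating `matvec` from the activation mirrors the split between the linear operation and the nonlinear function that the text describes.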

| Name | Description | Programming Language |

| Matrix Multiplication | Multiplication of a matrix and a vector | Python / C++ |

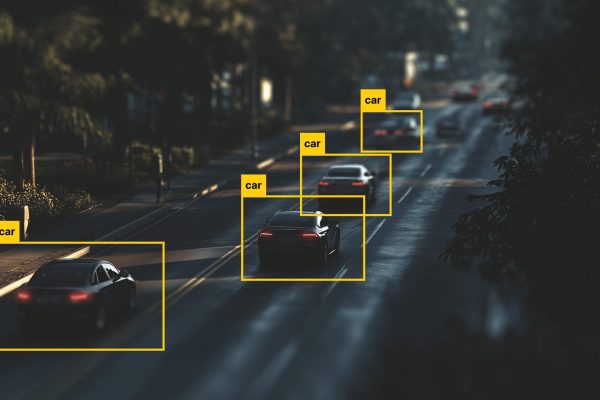

| Image Classification | Classification of an image (e.g. based on the ImageNet data set) | Python (Jupyter) |

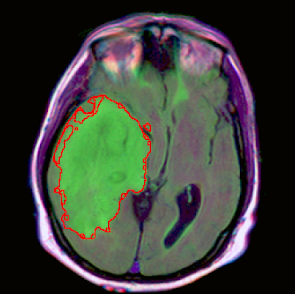

| Semantic Segmentation | Segmentation of an image (e.g. based on a brain MRI scan data set) | Python (Jupyter) |

| Attention-based AI models (coming soon) | e.g. speech recognition | Python (Jupyter) |

U-Net for cancer detection in brain MRI scans running on a Native Processing Unit (NPU)

Q.ANT’s Native Processing Server, built around a photonic analog processor, solves complex, real-world AI computations such as image recognition and segmentation. From running ResNet for object recognition in images to applying U-Net to identify cancer regions in brain MRI scans, the Native Processing Server handles billions of operations with 99% consistency with conventional digital computation, demonstrating the viability of photonic analog computing.
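The page does not define how the 99% consistency figure is measured. As one hypothetical way to compare an analog result against its digital reference, the sketch below models analog error as additive Gaussian noise on each output of a matrix-vector product (an assumption for illustration, not a description of the NPU) and reports how often the noisy and exact outputs select the same top-scoring class. All function names are hypothetical.

```python
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(weights: Matrix, x: Vector) -> Vector:
    """Exact digital matrix-vector product."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def noisy_matvec(weights: Matrix, x: Vector, noise_std: float,
                 rng: random.Random) -> Vector:
    """Stand-in for an analog computation: exact result plus Gaussian noise.

    The noise model is an illustrative assumption only.
    """
    return [y + rng.gauss(0.0, noise_std) for y in matvec(weights, x)]


def argmax(v: Vector) -> int:
    """Index of the largest entry (the predicted class)."""
    return max(range(len(v)), key=v.__getitem__)


def top1_agreement(trials: int, n_out: int, n_in: int,
                   noise_std: float, seed: int = 0) -> float:
    """Fraction of random trials where noisy and exact results pick the same class."""
    rng = random.Random(seed)
    matches = 0
    for _ in range(trials):
        w = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
        x = [rng.uniform(-1, 1) for _ in range(n_in)]
        exact = matvec(w, x)
        approx = noisy_matvec(w, x, noise_std, rng)
        matches += argmax(exact) == argmax(approx)
    return matches / trials


for std in (0.0, 0.01, 0.1):
    print(f"noise std {std}: agreement {top1_agreement(500, 10, 64, std):.3f}")
```

A real evaluation would compare hardware outputs with a digital run of the same model; the fixed seed here only keeps the simulation reproducible.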

Photonic Computing enables faster execution of matrix operations and nonlinear functions directly in hardware. This reduces latency and allows for more efficient model architectures with fewer parameters. The result: higher throughput and lower power consumption for both training large-scale models and real-time inference.

Many image processing tasks are inherently mathematical, relying heavily on Fourier transforms and convolutions. With photonic processors, these operations can be performed optically, in parallel and at the speed of light, which dramatically increases frame rates and lowers energy usage.
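The link between Fourier transforms and convolutions is the convolution theorem: a circular convolution equals the inverse transform of the product of the two spectra. The digital sketch below checks this identity on a short 1D signal, using a naive O(n²) DFT for clarity; images use the 2D version of the same identity. It illustrates the mathematics only and says nothing about how the NPU implements these operations.

```python
import cmath
from typing import List, Sequence


def dft(x: Sequence[complex]) -> List[complex]:
    """Naive O(n^2) discrete Fourier transform."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def idft(spectrum: Sequence[complex]) -> List[complex]:
    """Inverse of dft()."""
    n = len(spectrum)
    return [sum(spectrum[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]


def convolve_direct(a: Sequence[float], b: Sequence[float]) -> List[float]:
    """Circular convolution computed term by term."""
    n = len(a)
    return [float(sum(a[j] * b[(i - j) % n] for j in range(n))) for i in range(n)]


def convolve_fourier(a: Sequence[float], b: Sequence[float]) -> List[float]:
    """Circular convolution via the convolution theorem: multiply spectra, transform back."""
    product = [fa * fb for fa, fb in zip(dft(a), dft(b))]
    return [value.real for value in idft(product)]


signal = [1.0, 2.0, 3.0, 0.0]
kernel = [1.0, 1.0, 0.0, 0.0]
print(convolve_direct(signal, kernel))                             # [1.0, 3.0, 5.0, 3.0]
print([round(v, 6) for v in convolve_fourier(signal, kernel)])     # [1.0, 3.0, 5.0, 3.0]
```

In practice a fast Fourier transform replaces the naive DFT; the point here is that one pointwise multiplication in the frequency domain replaces the whole sliding-window sum.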

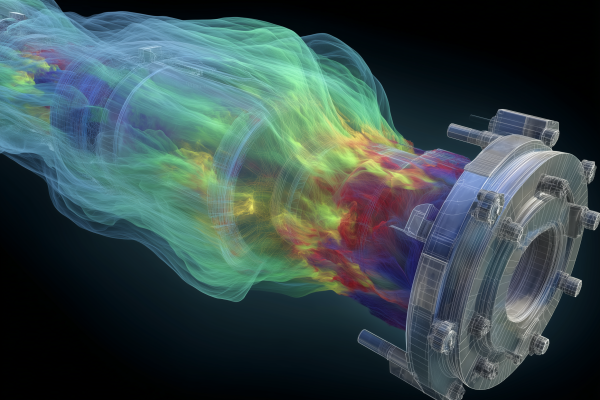

Scientific simulations often depend on solving complex partial differential equations and large-scale matrix systems. Photonic hardware provides a powerful platform for these workloads by enabling high-bandwidth, low-latency computing that scales with problem complexity, helping simulate physical phenomena faster and with greater efficiency.
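To show how a partial differential equation turns into a large matrix system, the minimal sketch below discretizes the 1D Poisson equation −u″ = f on [0, 1] with u(0) = u(1) = 0 using finite differences, which yields a tridiagonal linear system, and solves it with the Thomas algorithm. This is a conventional digital example of the workload class described above; the function names are hypothetical and nothing here runs on photonic hardware.

```python
import math
from typing import Callable, List, Sequence, Tuple


def solve_tridiagonal(lower: Sequence[float], diag: Sequence[float],
                      upper: Sequence[float], rhs: Sequence[float]) -> List[float]:
    """Thomas algorithm for a tridiagonal system A x = rhs.

    lower[i] multiplies x[i-1] in row i (lower[0] is unused);
    upper[i] multiplies x[i+1] in row i (upper[-1] is unused).
    """
    n = len(diag)
    c = [0.0] * n
    d = [0.0] * n
    c[0] = upper[0] / diag[0]
    d[0] = rhs[0] / diag[0]
    for i in range(1, n):  # forward elimination
        denom = diag[i] - lower[i] * c[i - 1]
        c[i] = upper[i] / denom if i < n - 1 else 0.0
        d[i] = (rhs[i] - lower[i] * d[i - 1]) / denom
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):  # back substitution
        x[i] = d[i] - c[i] * x[i + 1]
    return x


def poisson_1d(f: Callable[[float], float], n: int) -> Tuple[List[float], List[float]]:
    """Solve -u'' = f on n interior grid points with zero boundary values."""
    h = 1.0 / (n + 1)
    xs = [(i + 1) * h for i in range(n)]
    # (-u[i-1] + 2 u[i] - u[i+1]) / h^2 = f(x_i)  ->  tridiagonal system
    rhs = [h * h * f(x) for x in xs]
    u = solve_tridiagonal([-1.0] * n, [2.0] * n, [-1.0] * n, rhs)
    return xs, u


xs, u = poisson_1d(lambda x: math.pi ** 2 * math.sin(math.pi * x), 99)
err = max(abs(ui - math.sin(math.pi * x)) for x, ui in zip(xs, u))
print(f"max error vs exact solution sin(pi x): {err:.2e}")
```

Refining the grid enlarges the system, which is why such simulations quickly become dominated by large-scale matrix work.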

Thin Film Lithium Niobate on Insulator (TFLNoI): The Optimal Material Choice for Photonic Integrated Circuits (PICs)

Q.ANT relies on its proprietary material platform to make the photonic chips inside the NPU. The central components of the PIC are optical waveguides, modulators, and various other building blocks that enable high-speed, precise control of light, all integrated on a single chip at the nanoscale. In this chip, a very thin layer of lithium niobate is bonded onto a silicon wafer, on which the photonic components are fabricated. We believe that TFLNoI is the key to the future of photonic computing.

PICs based on TFLNoI show several main advantages:

SVP Native Computing

I look forward to discussing the potentials of Photonic Computing with you.

Q.ANT GmbH

Handwerkstr. 29

70565 Stuttgart

Germany

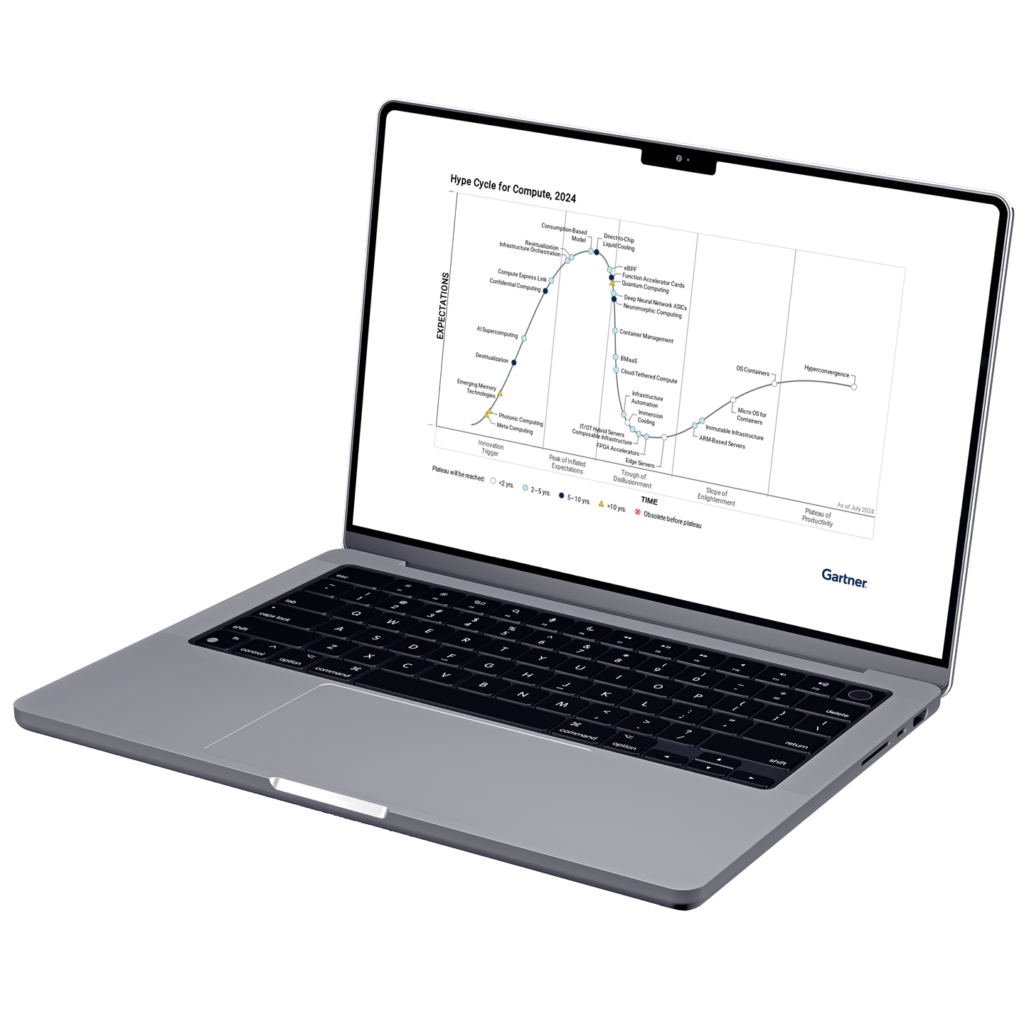

We provide exclusive access to the Gartner® Hype Cycle™ for Compute 2024 report. Learn how Photonic Computing can transform future business and society.